
UCloud Cloud Host

Account password: FS12345678

Environment Setup

Postgres

apt update

apt install postgresql postgresql-contrib

su postgres

psql

postgres=# alter user postgres with password 'ROOT';

vi /etc/postgresql/9.5/main/pg_hba.conf

# add this line to allow password (md5) logins from 10.60.178.0/24:
host all all 10.60.178.0/24 md5

service postgresql restart

createdb iOTA_console

psql -d iOTA_console < dump.sql

Docker

curl -sSL https://get.daocloud.io/docker | sh

Redis

Exposing Redis's default port to the public network is insecure, so enable the Ubuntu firewall (ufw):

ufw enable

ufw status

# Allow incoming connections by default

ufw default allow

# Deny external access to port 6379

ufw deny 6379

# Other examples

# Allow 10.0.1.0/10 to reach TCP port 7277 on this host (10.8.30.117)

ufw allow proto tcp from 10.0.1.0/10 to 10.8.30.117 port 7277

Status: active

To                         Action      From
--                         ------      ----
6379                       DENY        Anywhere
6379 (v6)                  DENY        Anywhere (v6)

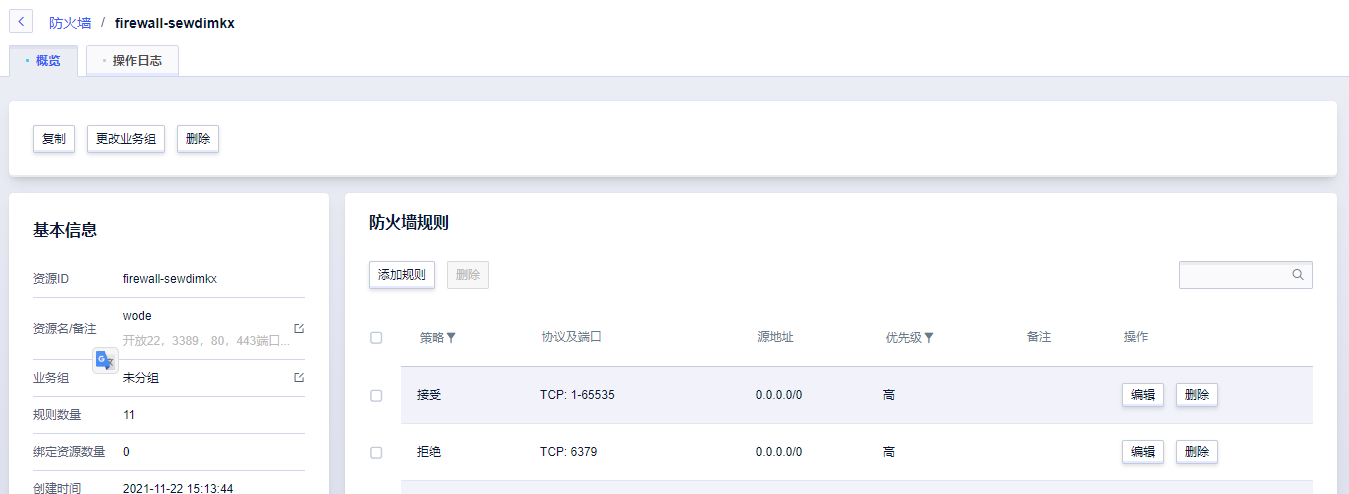

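Even with ufw configured, the opened ports were still unreachable from the public network. In the UCloud console:

Basic Network UNet > External Firewall > Create Firewall (custom rules)

Open all TCP ports and block only Redis (6379).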

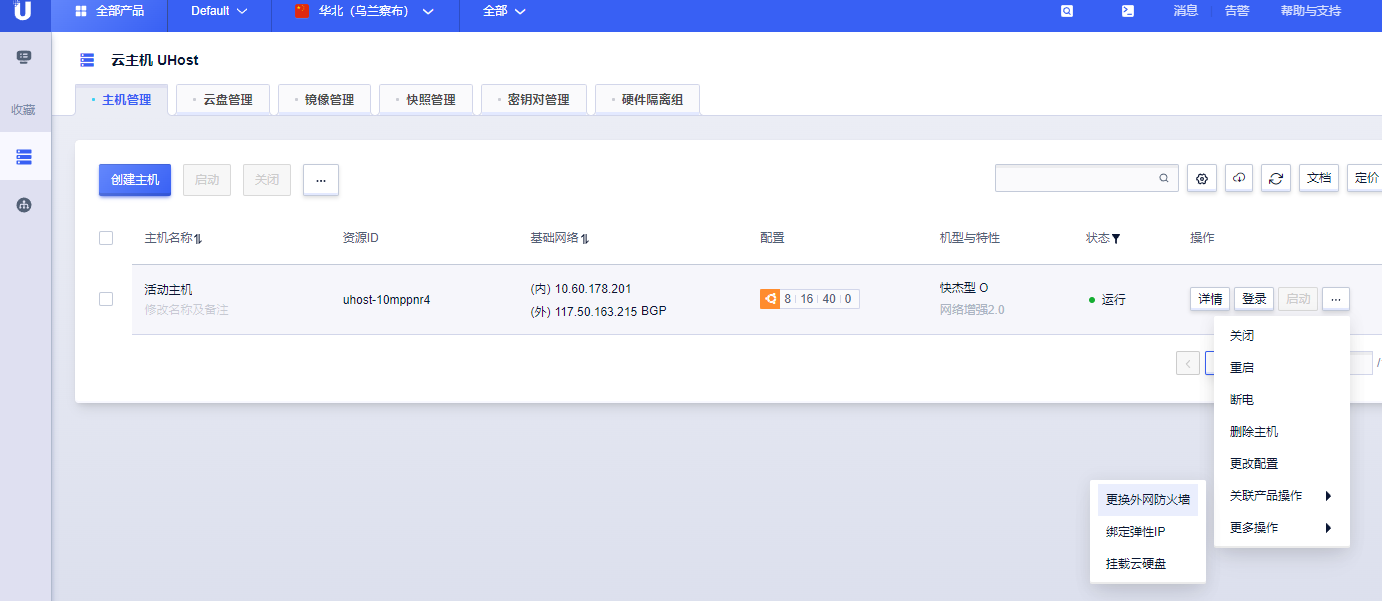

Cloud Host UHost > Associated Resource Actions > Change External Firewall

Install Redis

apt update

apt install redis-server

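Traffic Mirroring Test

Because the data center is being relocated, we plan to run a single DAC instance in the cloud for data acquisition.

Preparation: run an online traffic-mirroring test without affecting data acquisition by the production DAC. The plan:

- Forward passive connections on the proxy to UCloud.
- Replicate streams one way only: the device -> proxy -> DAC path is connected, while the return path DAC -> proxy -|-> device is left open (cut).
- Active connections (MQTT and HTTP clients that actively connect to third-party servers):
  - append a suffix to the MQTT client ID
  - cut off writes from drivers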
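The client-ID suffix matters because of how brokers handle duplicates. When a second client connects with the same ClientId, an MQTT broker disconnects the client that is already connected (MQTT 3.1.1, section 3.1.4). A mirrored DAC that reused the production IDs would therefore knock the production connections off.

MQTT 3.1.1 also only requires brokers to accept client IDs of 1 to 23 bytes. For that reason the helper below can optionally trim the original ID to fit a limit.

This is a minimal sketch. The function name mirrorClientID and the "-ucloud" suffix are hypothetical.

```go
package main

import "fmt"

// mirrorClientID appends suffix to a production client ID so the mirrored DAC's
// connection does not take over the production session on the broker.
// If maxLen > 0 the original ID is trimmed so the result fits in maxLen bytes
// (byte-based trimming; assumes ASCII client IDs). maxLen <= 0 means no limit.
func mirrorClientID(id, suffix string, maxLen int) string {
	if maxLen > 0 && len(id)+len(suffix) > maxLen {
		keep := maxLen - len(suffix)
		if keep <= 0 {
			return suffix[:maxLen]
		}
		id = id[:keep]
	}
	return id + suffix
}

func main() {
	fmt.Println(mirrorClientID("dac-2", "-ucloud", 23))             // dac-2-ucloud
	fmt.Println(mirrorClientID("dac-2-sensor-0001", "-ucloud", 23)) // dac-2-sensor-000-ucloud
}
```

Trimming can make two long IDs collide again. If the broker accepts longer IDs, passing maxLen = 0 avoids that.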

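Key code

import (
	"fmt"
	"io"
	"net"
	"sync"
	"time"
	// log is the project's own logger (printf-style Info/Error), not the standard library "log"
)

// OutTarget (defined elsewhere in the package) is the mirror target host; "" disables mirroring.

// io.Copy can consume a stream only once, so io.TeeReader is used to feed the same bytes to two writers.
// If OutTarget is configured, copy the stream locally and mirror it to the outside target at the same time.
func Pipeout(conn1, conn2 net.Conn, port string, wg *sync.WaitGroup, reg []byte) {
	if OutTarget != "" {
		tt := fmt.Sprintf("%s:%s", OutTarget, port)
		tw := NewTeeWriter(tt, reg)
		tw.Start()
		// Bytes read from conn2 are written to conn1 by the TeeReader and copied to tw by io.Copy.
		if _, err := io.Copy(tw, io.TeeReader(conn2 /*read*/, conn1 /*write*/)); err != nil {
			log.Error("pipeout error: %v", err)
		}
		tw.Close()
	} else {
		io.Copy(conn1, conn2)
	}
	conn1.Close()
	log.Info("[tcp] close the connect at local:%s and remote:%s", conn1.LocalAddr().String(), conn1.RemoteAddr().String())
	wg.Done()
}

// TeeWriter is the traffic-mirroring writer.
type TeeWriter struct {
	target    string           // mirror target address
	mu        sync.Mutex       // guards conn and isConnect, shared by Write and keep_connect
	conn      net.Conn         // mirror connection
	isConnect bool             // whether the mirror connection is up
	exitCh    chan interface{} // closed by Close to stop keep_connect
	closeOnce sync.Once        // lets Close be called more than once without panicking
	registry  []byte           // registration packet sent after every (re)connect
}

func NewTeeWriter(target string, reg []byte) *TeeWriter {
	return &TeeWriter{
		target:   target,
		exitCh:   make(chan interface{}),
		registry: reg,
	}
}

func (w *TeeWriter) Start() error {
	go w.keep_connect()
	return nil
}

func (w *TeeWriter) Close() error {
	w.closeOnce.Do(func() { close(w.exitCh) })
	return nil
}

func (w *TeeWriter) setConn(c net.Conn) {
	w.mu.Lock()
	w.conn, w.isConnect = c, c != nil
	w.mu.Unlock()
}

// Write never blocks the main stream and never returns an error: mirroring is best-effort.
func (w *TeeWriter) Write(p []byte) (n int, err error) {
	w.mu.Lock()
	conn, ok := w.conn, w.isConnect
	w.mu.Unlock()
	if ok {
		// io.Copy reuses p after Write returns, so the goroutine needs its own copy.
		buf := append([]byte(nil), p...)
		// One goroutine per chunk keeps this call non-blocking, but chunks may reach
		// the mirror out of order. Write errors are ignored.
		go conn.Write(buf)
	}
	return len(p), nil
}

func (w *TeeWriter) keep_connect() {
	defer func() {
		if err := recover(); err != nil {
			log.Error("teewrite keep connect error: %v", err)
		}
	}()
	for {
		if cont := func() bool {
			conn, err := net.Dial("tcp", w.target)
			if err != nil {
				// Retry after one second unless the writer is being closed.
				select {
				case <-time.After(time.Second):
					return true
				case <-w.exitCh:
					return false
				}
			}
			defer conn.Close()
			// Send the registration packet (if any) before any mirrored data.
			if w.registry != nil {
				if _, err := conn.Write(w.registry); err != nil {
					return true
				}
			}
			if err := conn.(*net.TCPConn).SetKeepAlive(true); err != nil {
				return true
			}
			if err := conn.(*net.TCPConn).SetKeepAlivePeriod(30 * time.Second); err != nil {
				return true
			}
			// Publish the connection to Write; it is unpublished before conn.Close runs (defers are LIFO).
			w.setConn(conn)
			defer w.setConn(nil)

			// Watch the remote (bconn) side: a failed Read means the peer closed the connection.
			// The channel is buffered so the single send never blocks, even after this function returns.
			connLostCh := make(chan error, 1)
			go func() {
				defer log.Info("bconn check exit")
				one := make([]byte, 1)
				for {
					if _, err := conn.Read(one); err != nil {
						log.Info("bconn disconnected")
						connLostCh <- err
						return
					}
					time.Sleep(time.Second)
				}
			}()

			select {
			case <-connLostCh:
				time.Sleep(10 * time.Second) // back off before reconnecting
				return true
			case <-w.exitCh:
				return false
			}
		}(); !cont {
			break
		} else {
			time.Sleep(time.Second)
		}
	}
}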

The traffic-mirroring test was not carried out.

DAC Online Test

Configuration:

url.maps.json needs to be configured for the following addresses:

"47.106.112.113:1883"
"47.104.249.223:1883"
"mqtt.starwsn.com:1883"
"test.tdzntech.com:1883"
"mqtt.tdzntech.com:1883"
"s1.cn.mqtt.theiota.cn:8883"
"mqtt.datahub.anxinyun.cn:1883"
"218.3.126.49:3883"
"221.230.55.28:1883"
"anxin-m1:1883"
"10.8.25.201:8883"
"10.8.25.231:1883"
"iota-m1:1883"

The following data could not be retrieved:

- GNSS data:
  http.get error: Get "http://10.8.25.254:7005/gnss/6542/data?startTime=1575443410000&endTime=1637628026000": dial tcp 10.8.25.254:7005: i/o timeout
- 时

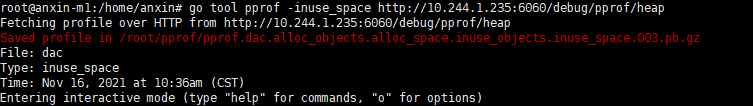

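Troubleshooting DAC Memory Usage

These notes are not very well organized; for a clearer reference see https://www.cnblogs.com/gao88/p/9849819.html

Using pprof:

Check memory usage per process: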

top -c

# press Shift+M to sort by memory usage

top - 09:26:25 up 1308 days, 15:32,  2 users,  load average: 3.14, 3.70, 4.37
Tasks: 582 total,   1 running, 581 sleeping,   0 stopped,   0 zombie
%Cpu(s):  5.7 us,  1.5 sy,  0.0 ni, 92.1 id,  0.0 wa,  0.0 hi,  0.8 si,  0.0 st
KiB Mem : 41147560 total,   319216 free, 34545608 used,  6282736 buff/cache
KiB Swap:        0 total,        0 free,        0 used.  9398588 avail Mem

  PID USER      PR  NI    VIRT    RES    SHR S  %CPU %MEM     TIME+ COMMAND
18884 root      20   0 11.238g 0.010t  11720 S  48.8 26.7  39:52.43 ./dac

The dac process is using more than 10 GB of memory (RES 0.010t).
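top's RES column (0.010t here, roughly 10 GiB) is the resident set size. Linux also reports it as the VmRSS field of /proc/<pid>/status. Reading that field is handy for scripting, or for a process that wants to log its own usage.

Below is a minimal Go sketch. parseVmRSS is our own helper, and it assumes the usual "VmRSS: <n> kB" line format of Linux procfs.

```go
package main

import (
	"bufio"
	"errors"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// parseVmRSS extracts the resident set size in bytes from the contents of
// /proc/<pid>/status (Linux). The kernel reports the value in kB (KiB).
func parseVmRSS(status string) (int64, error) {
	sc := bufio.NewScanner(strings.NewReader(status))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) >= 2 && fields[0] == "VmRSS:" {
			kb, err := strconv.ParseInt(fields[1], 10, 64)
			if err != nil {
				return 0, err
			}
			return kb * 1024, nil
		}
	}
	return 0, errors.New("VmRSS not found")
}

func main() {
	pid := "self"
	if len(os.Args) > 1 {
		pid = os.Args[1] // e.g. 18884
	}
	data, err := os.ReadFile("/proc/" + pid + "/status")
	if err != nil {
		fmt.Println(err)
		return
	}
	rss, err := parseVmRSS(string(data))
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("pid %s RSS %.2f GiB\n", pid, float64(rss)/(1<<30))
}
```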

Find the container the process belongs to:

root@iota-n3:/home/iota/etwatcher# systemd-cgls | grep 18884
│ │ ├─32574 grep --color=auto 18884
│ │ └─18884 ./dac

for i in $(docker container ls --format "{{.ID}}"); do docker inspect -f '{{.State.Pid}} {{.Name}}' $i; done | grep 18884

The process belongs to dac-2.

To view the processes (and their PIDs) of a specific container:

docker top container_id

To list the PIDs of all containers:

for l in `docker ps -q`;do docker top $l|awk -v dn="$l" 'NR>1 {print dn " PID is " $2}';done

Or via docker inspect:

docker inspect --format "{{.State.Pid}}" container_id/name
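An alternative to looping over every container is to read the process's own cgroup membership. On a Docker host, /proc/<pid>/cgroup usually contains a path with the 64-character hex container ID. Examples are paths under /docker/, or under /kubepods/... for Kubernetes pods like this one. The first 12 characters of that ID match the CONTAINER ID column of docker ps.

The sketch below rests on two assumptions. containerIDFromCgroup is a hypothetical helper. The path layout also depends on the cgroup driver and version, so the regular expression is an assumption too.

```go
package main

import (
	"fmt"
	"os"
	"regexp"
)

// A full Docker container ID is 64 lowercase hex characters; docker ps shows the first 12.
var containerIDRe = regexp.MustCompile(`[0-9a-f]{64}`)

// containerIDFromCgroup returns the first container ID found in the contents of
// /proc/<pid>/cgroup, or "" if the process does not appear to run in a container.
func containerIDFromCgroup(cgroup string) string {
	return containerIDRe.FindString(cgroup)
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: cgid <pid>")
		return
	}
	data, err := os.ReadFile("/proc/" + os.Args[1] + "/cgroup")
	if err != nil {
		fmt.Println(err)
		return
	}
	if id := containerIDFromCgroup(string(data)); id != "" {
		fmt.Println("container", id[:12]) // short form, as printed by docker ps
	} else {
		fmt.Println("no container ID found")
	}
}
```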

Inspect the dac-2 containers:

root@iota-n3:~# docker ps | grep dac-2
05b04c4667bc repository.anxinyun.cn/iota/dac "./dac" 2 hours ago Up 2 hours k8s_iota-dac_iota-dac-2_iota_d9879026-465b-11ec-ad00-c81f66cfe365_1
be5682a82cda theiota.store/iota/filebeat "filebeat -e" 4 hours ago Up 4 hours k8s_iota-filebeat_iota-dac-2_iota_d9879026-465b-11ec-ad00-c81f66cfe365_0
f23499bc5c22 gcr.io/google_containers/pause-amd64:3.0 "/pause" 4 hours ago Up 4 hours k8s_POD_iota-dac-2_iota_d9879026-465b-11ec-ad00-c81f66cfe365_0
c5bcbf648268 repository.anxinyun.cn/iota/dac "./dac" 6 days ago Up 6 days k8s_iota-dac_iota-dac-2_iota_2364cf27-41a0-11ec-ad00-c81f66cfe365_0

There are two dac containers? (The other one is a zombie process; leave it for now.)

Enter the container:

docker exec -it 05b04c4667bc /bin/ash

There is no curl command in the container.

Use wget -q -O - https://www.baidu.com instead; it prints the response body directly to stdout.

On the host:

go tool pprof -inuse_space http://10.244.1.235:6060/debug/pprof/heap

# top: show the 10 largest consumers of in-use memory

(pprof) top
Showing nodes accounting for 913.11MB, 85.77% of 1064.60MB total
Dropped 215 nodes (cum <= 5.32MB)
Showing top 10 nodes out of 109
      flat  flat%   sum%        cum   cum%
  534.20MB 50.18% 50.18%   534.20MB 50.18%  runtime.malg
   95.68MB  8.99% 59.17%    95.68MB  8.99%  iota/vendor/github.com/yuin/gopher-lua.newLTable
   61.91MB  5.82% 64.98%    90.47MB  8.50%  iota/vendor/github.com/yuin/gopher-lua.newFuncContext
   50.23MB  4.72% 69.70%    50.23MB  4.72%  iota/vendor/github.com/yuin/gopher-lua.newRegistry
   34.52MB  3.24% 72.94%    34.52MB  3.24%  iota/vendor/github.com/yuin/gopher-lua.(*LTable).RawSetString
      33MB  3.10% 76.04%       33MB  3.10%  iota/vendor/github.com/eclipse/paho%2emqtt%2egolang.outgoing
      31MB  2.91% 78.95%       31MB  2.91%  iota/vendor/github.com/eclipse/paho%2emqtt%2egolang.errorWatch
      31MB  2.91% 81.87%       31MB  2.91%  iota/vendor/github.com/eclipse/paho%2emqtt%2egolang.keepalive
   27.06MB  2.54% 84.41%    27.06MB  2.54%  iota/vendor/github.com/yuin/gopher-lua.newFunctionProto (inline)
   14.50MB  1.36% 85.77%    14.50MB  1.36%  iota/vendor/github.com/eclipse/paho%2emqtt%2egolang.alllogic

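Common pprof commands:
- top: list the biggest consumers
- list: show a function's source code with the corresponding samples
- disasm: show the assembly with the corresponding samples
- web: generate an SVG call graph (requires Graphviz)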

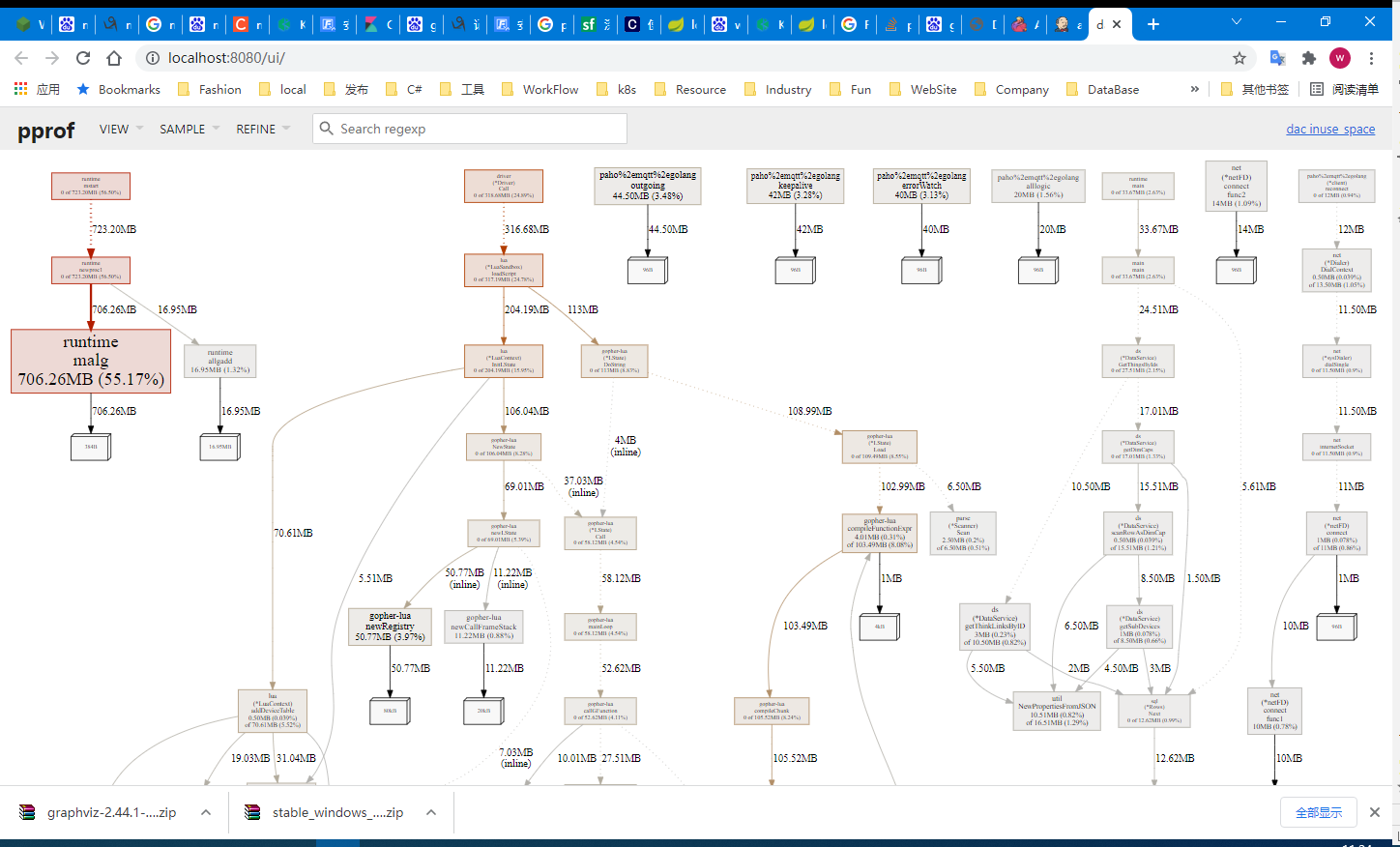

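Running go tool pprof on the server saves a profile file (by default under $HOME/pprof). Copy it to a local Windows machine, then:

Install Graphviz:

https://graphviz.gitlab.io/_pages/Download/Download_windows.html

Download the zip, extract it, and add its bin directory to the PATH system environment variable.

C:\Users\yww08>dot -version
dot - graphviz version 2.45.20200701.0038 (20200701.0038)
There is no layout engine support for "dot"
Perhaps "dot -c" needs to be run (with installer's privileges) to register the plugins?

Run dot -c (with administrator privileges, as the message suggests) to register the plugins.

Run pprof locally: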

go tool pprof --http=:8080 pprof.dac.alloc_objects.alloc_space.inuse_objects.inuse_space.003.pb.gz

Memory usage is concentrated in:

runtime.malg

After a lot of searching, I found that the Go project has long had an issue about this (official issue). The maintainers know about it, but it is hard to fix. From the issue: "Your observation is correct. Currently the runtime never frees the g objects created for goroutines, though it does reuse them. The main reason for this is that the scheduler often manipulates g pointers without write barriers (a lot of scheduler code runs without a P, and hence cannot have write barriers), and this makes it very hard to determine when a g can be garbage collected."

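In short: Go's GC is a concurrent collector that relies on write barriers, but the scheduler manipulates goroutine (g) pointers without them (see draveness's analysis). Much of the scheduler code runs without a P (in the GPM model) and therefore cannot use write barriers. This makes it very hard for the runtime to tell when a g can be garbage collected, so g objects are never freed, only reused.

Copyright notice: this is an original article by CSDN blogger "wuyuhao13579", licensed under CC 4.0 BY-SA; reposts must include the original link and this notice. Original: https://blog.csdn.net/wuyuhao13579/article/details/109079570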

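Check the process logs:

The logs of the problematic DAC repeatedly show:

Loss connection

This message is logged by the DAC code when the MQTT connection drops. The source: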

func (d *Mqtt) Connect() (err error) {
	//TODO not safe
	d.setConnStat(statInit)
	//decode
	//set opts
	opts := pahomqtt.NewClientOptions().AddBroker(d.config.URL)
	opts.SetClientID(d.config.ClientID)
	opts.SetCleanSession(d.config.CleanSessionFlag)
	opts.SetKeepAlive(time.Second * time.Duration(d.config.KeepAlive)) // 30s
	opts.SetPingTimeout(time.Second * time.Duration(d.config.KeepAlive*2))
	opts.SetConnectionLostHandler(func(c pahomqtt.Client, err error) {
		// Callback invoked when the MQTT connection is lost.
		log.Debug("[Mqtt] Loss connection, %s %v", err, d.config)
		// NOTE: if terminateFlag is unbuffered and nothing is receiving, this send blocks the callback.
		d.terminateFlag <- true
		//d.Reconnect()
	})
	// ... (rest of Connect omitted in these notes)
}
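The handler above sends on d.terminateFlag from inside paho's connection-lost callback. Whether that send can block depends on how terminateFlag is created and consumed, which the excerpt does not show. If the channel is unbuffered and its reader is not waiting, the goroutine running the callback stays blocked. With repeated disconnects, such goroutines can pile up.

One defensive pattern is a channel with a buffer of one plus a non-blocking send. Repeated disconnects then collapse into a single pending signal. This is a sketch under that assumption; notifyTerminate is a hypothetical helper.

```go
package main

import "fmt"

// notifyTerminate performs a non-blocking send on a channel created with
// make(chan bool, 1): if a signal is already pending, the new one is dropped
// instead of blocking the caller (e.g. an MQTT connection-lost callback).
func notifyTerminate(ch chan<- bool) bool {
	select {
	case ch <- true:
		return true
	default:
		return false // a termination signal is already queued
	}
}

func main() {
	terminate := make(chan bool, 1)
	fmt.Println(notifyTerminate(terminate)) // true
	fmt.Println(notifyTerminate(terminate)) // false: dropped, never blocks
	<-terminate                             // the reconnect loop consumes the signal
	fmt.Println(notifyTerminate(terminate)) // true
}
```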

Object Storage (OSS)

Alibaba Cloud OSS basic concepts: https://help.aliyun.com/document_detail/31827.html